A Day in TensorFlow Roadshow

Having started as an internal project developed by researchers on the Google Brain team, TensorFlow has become the most loved software library of 2018 (according to Stack Overflow’s 2018 survey). Google open sourced TensorFlow in 2015, and ever since it has been continuously evolving and growing its community. This has encouraged Google to make the library better and accessible to more researchers, and it has encouraged the Machine Learning community to try the library and build models that have already solved many crucial problems. Paying attention to the community’s feedback has really helped TensorFlow, and the TensorFlow team engages with the community in several ways. Yesterday, the TensorFlow team organised a roadshow for the Machine Learning community in Bangalore, and it had wonderful sessions.

The roadshow had full attendance, and the developer community was welcomed and addressed by Karthik Padmanabhan, Developer Relations Lead, Google. Over the past few years, Machine Learning, and more specifically Deep Learning, has changed a lot about how technology impacts human lives. The progress in Deep Learning has led to rapid advancements in Computer Vision and NLP tasks. With the help of all this progress, ML has made possible many things that were previously not even thought about. TensorFlow, as a library for building Machine Learning solutions, is driving this forward by enabling researchers to turn their problem-solving ideas into reality. Dushyantsinh Jadeja started the first session of the day by emphasising how inevitable ML has become in our lives and how TensorFlow has already helped in building some life-changing solutions.

TensorFlow is used to solve many kinds of problems: in astronomy, to detect new solar systems and planets; in forest conservation, to prevent deforestation by using sensors that detect illegal activity in real time and raise alerts automatically; in agriculture, to assess the quality of grain and better assist farmers; and, most positive of all, in the medical field, to help doctors make better and quicker decisions. For a library with the potential to solve problems like these, it is not surprising that TensorFlow has become one of the most used libraries for ML.

Sandeep Gupta, Project Manager of TensorFlow, briefly touched upon some of the main and most used TensorFlow modules and features in his first talk of the day. TensorFlow provides high-level APIs like Estimators, tf.data and the quite popular Keras API, which make building machine learning models easy and fast. Saved TensorFlow models can be converted and deployed on mobile devices using TFLite. TF.js enables JavaScript developers to leverage Machine Learning right in the browser. Tensor2Tensor is a separate library that provides ready-to-use datasets and models. Later in the day there were very useful talks that went into the details of some of these tools and libraries. TensorFlow has more than 1,600 contributors so far, and most of them are from outside Google!

Using TensorFlow to Prototype and Train Models

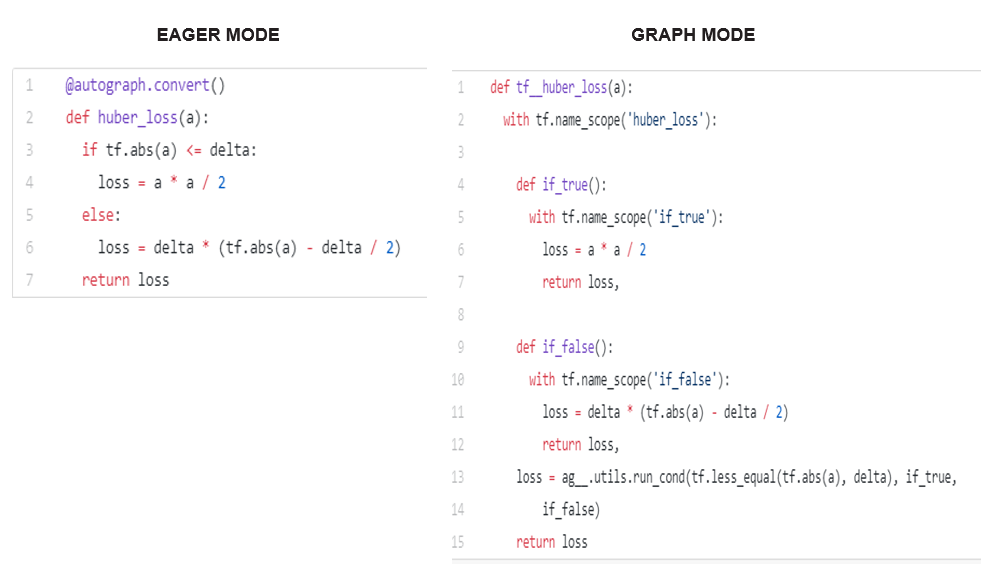

Building a Machine Learning model to solve a problem involves several steps, from collecting the data to saving and deploying the trained model. Amit Patankar used a Heart Disease dataset to quickly take us through these steps. While doing so, he also showed how Estimators make the process easier by providing different models that can be tried and evaluated.

It was then time for Wolff Dobson to take the stage. By default, to code and run anything in TensorFlow, one first needs to create a graph that contains all the necessary components and then run the graph from a session. Though this mode of execution has its own pros, like better optimization and simplified distributed training and deployment, it can get a bit tedious and is not really beginner friendly. The opposite of this is Eager Execution mode, where execution happens immediately and no session is required. This sounds much better for beginners and researchers who want to prototype and code their models. But the trade-off is losing the advantages that come with the former approach. Wouldn’t it be nice if we could get the pros of both approaches? That’s what AutoGraph does!

AutoGraph takes code written in eager mode and converts it into its graph equivalent. The above image is one example that shows how different the code looks in the two modes and how useful AutoGraph can be! By using AutoGraph, one can prototype in eager mode and deploy in graph mode. Wolff Dobson also talked about the ongoing work on TensorFlow 2.0, which is due for release next year, and asked the community to get involved in design decisions through the RFC (Request for Comments) process.
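To make the difference between the two execution modes concrete, here is a minimal sketch in plain Python. It is only a toy: the `Node`, `constant` and `Session` names are hypothetical stand-ins written for this post, not TensorFlow classes, and it does not show how AutoGraph itself performs the conversion. It only shows what "build a graph first, run it later" means compared with computing values immediately.

```python
# Toy illustration only: these names are hypothetical and are NOT TensorFlow APIs.

class Node:
    """A deferred operation in a tiny computation graph."""

    def __init__(self, op, inputs=(), value=None):
        self.op = op          # "const", "add" or "mul"
        self.inputs = inputs  # upstream Node objects
        self.value = value    # only set for constants

    def __add__(self, other):
        return Node("add", (self, other))

    def __mul__(self, other):
        return Node("mul", (self, other))


def constant(value):
    """Wrap a plain number as a graph node."""
    return Node("const", value=value)


class Session:
    """Walks the graph and computes values only when run() is called."""

    def run(self, node):
        if node.op == "const":
            return node.value
        left, right = (self.run(n) for n in node.inputs)
        return left + right if node.op == "add" else left * right


# Graph style: describe the computation first, execute it later.
a, b = constant(2.0), constant(3.0)
graph_result = a * b + a                   # nothing is computed yet; this is a Node
graph_value = Session().run(graph_result)  # the computation happens here

# Eager style: every line executes immediately on plain values.
x, y = 2.0, 3.0
eager_value = x * y + x

print(type(graph_result).__name__, graph_value, eager_value)
```

Both styles arrive at the same number. The graph version keeps a full description of the computation around, which is what makes optimization and deployment easier, while the eager version is simply easier to write and debug.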

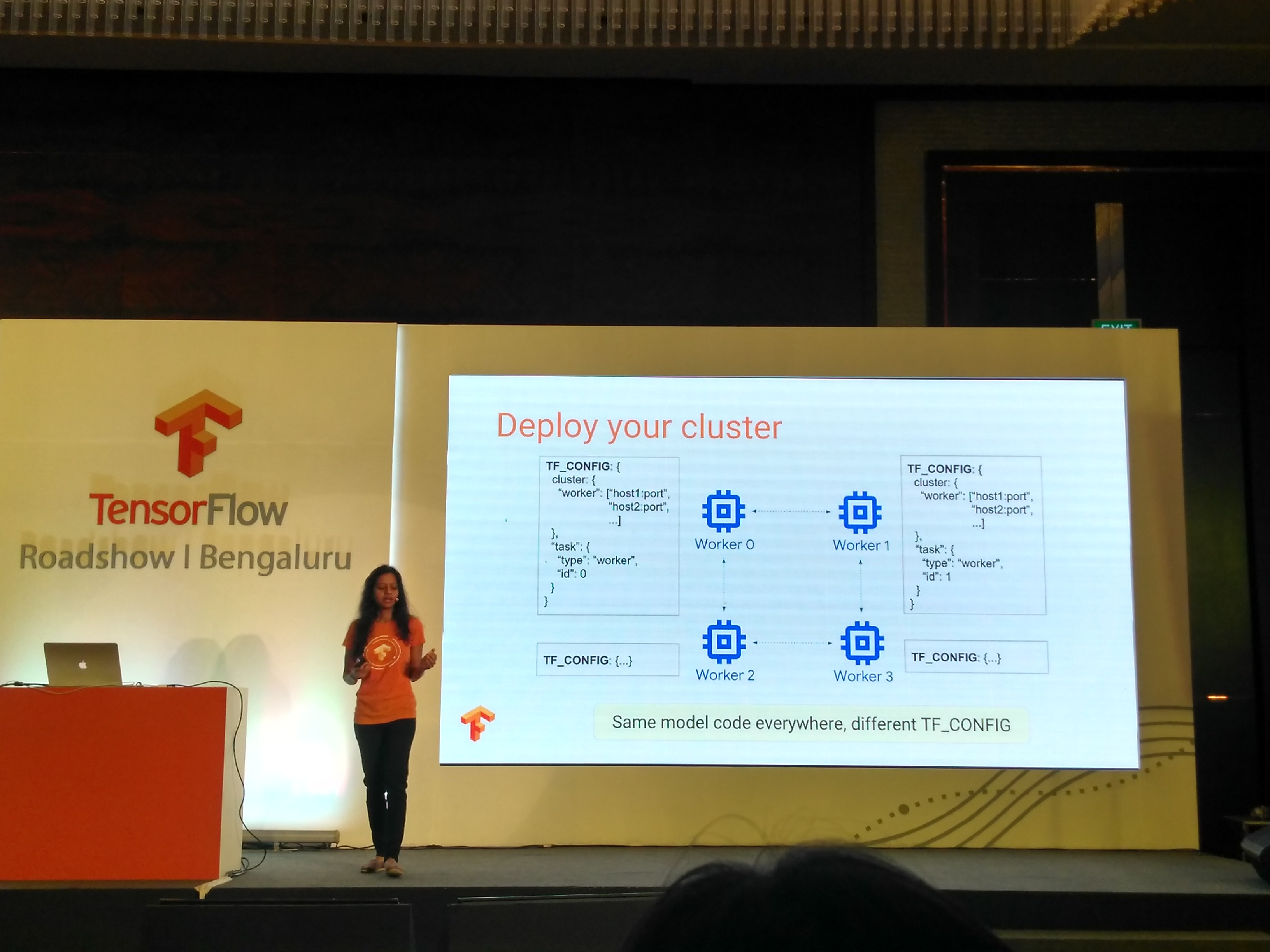

The next two talks were about scaling with TensorFlow using distributed computation and accelerators. Priya Gupta talked about Distributed TensorFlow. Depending on the dataset used, the architecture chosen and a few other things, training a Machine Learning model can take anywhere from minutes to days or weeks. Distributed training brings the training time down substantially compared to non-distributed mode. In this mode, a cluster (a group of workers/processors) is used for graph execution rather than a single processing unit. This not only shortens training time but also results in better performance, and it can be enabled with only minimal code changes. TPUs, which are Tensor Processing Units, come with powerful computation capabilities. TPUs along with Distributed TensorFlow give great results for building Deep Learning models. In his talk, Sourabh Bajaj explained how the computational demands of rapidly advancing Deep Learning methods led to the design and development of TPUs.
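One common way to spread training over a cluster is synchronous data parallelism: every worker computes a gradient on its own slice of the data, the gradients are averaged, and a single shared update is applied. The sketch below illustrates that idea in plain Python for a one-weight model. It is an assumption-laden toy, not the Distributed TensorFlow API, and the `gradient`, `split` and `train` helpers are hypothetical names. The "workers" here run one after another in a loop; on a real cluster they would run in parallel.

```python
# Hypothetical toy: plain Python, not the TensorFlow distribution API.

def gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def split(data, num_workers):
    """Give each worker an equally sized, interleaved slice of the data."""
    return [data[i::num_workers] for i in range(num_workers)]


def train(data, num_workers, steps=200, lr=0.01):
    """Synchronous data-parallel gradient descent on a single weight."""
    w = 0.0
    shards = split(data, num_workers)
    for _ in range(steps):
        # Each worker computes a gradient on its own shard.
        grads = [gradient(w, shard) for shard in shards]
        # The gradients are averaged and one shared update is applied.
        w -= lr * sum(grads) / len(grads)
    return w


# Data generated from y = 3x, so the learned weight should approach 3.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
w_single = train(data, num_workers=1)
w_multi = train(data, num_workers=4)
print(round(w_single, 3), round(w_multi, 3))
```

Because the shards are equally sized, averaging the per-worker gradients gives the same update as computing the gradient on the full dataset, so one worker and four workers learn the same weight. The speed-up on real hardware comes from each worker handling only a fraction of the data per step.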

Distributed TensorFlow

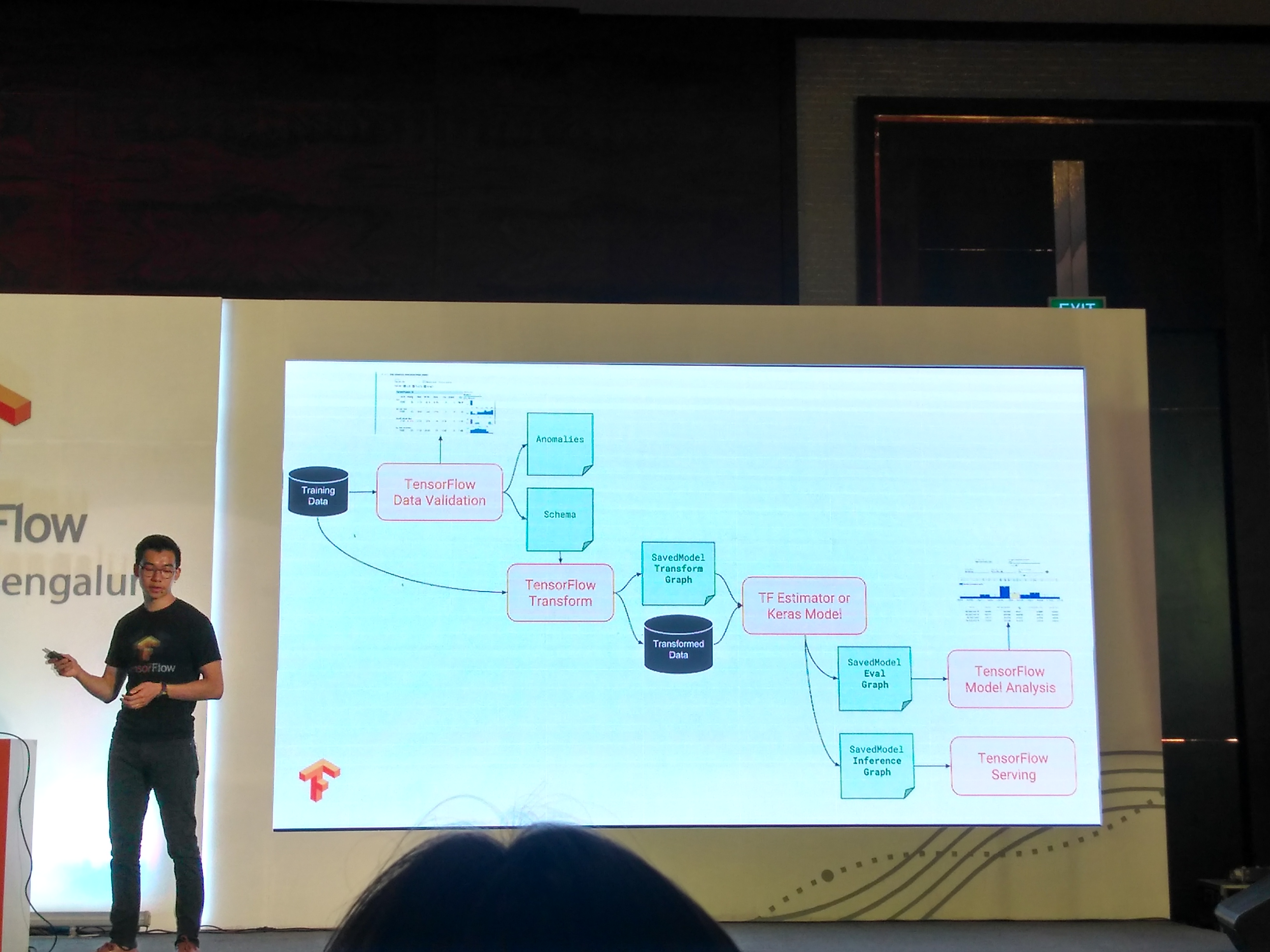

When building Machine Learning models, there are many more things to consider and worry about than just the code. Collecting the data and getting it ready for model training is a time-consuming process. Since model performance and accuracy depend heavily on the data used for training, data validation and transformation become critical. At a high level, a Machine Learning model takes data and learns from it to produce a trained model that can be used for inference, but taking this to production involves a lot of constraints and requirements. Instead of focusing only on building and deploying models, TensorFlow is growing its support for the other parts of the Machine Learning pipeline, and TensorFlow Extended (TFX) does exactly that. Kenny Song explained through a series of slides what TFX is and what it does. TFX is an end-to-end ML platform that provides libraries to validate and analyze data at different stages of training and serving, to analyze the trained model over different slices of data, and so on. He also talked about TF Hub, which is a repository of reusable Machine Learning modules. A module contains a TF graph along with the weights it learned when trained for some specific task. These modules can be used as is for the same task, or reused with some modifications to solve other, similar tasks.
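As a rough illustration of what validating data at training and serving time can mean, the sketch below infers a very simple schema from training rows and then checks serving rows against it. It is a plain-Python toy under my own assumptions, not the TFX API: the `infer_schema` and `validate` helpers and the made-up `age` and `chol` features are hypothetical, only numeric features are handled, and flagging every value outside the training range is a deliberately strict rule chosen to keep the example short.

```python
# Hypothetical toy: plain Python, not the TFX or TensorFlow Data Validation API.

def infer_schema(rows):
    """Record the type and observed range of each numeric feature in the training data."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return {
        name: {"type": type(values[0]), "min": min(values), "max": max(values)}
        for name, values in columns.items()
    }


def validate(rows, schema):
    """Return human-readable anomalies found in rows, judged against the schema."""
    anomalies = []
    for i, row in enumerate(rows):
        for name, spec in schema.items():
            if name not in row:
                anomalies.append(f"row {i}: missing feature '{name}'")
            elif not isinstance(row[name], spec["type"]):
                anomalies.append(f"row {i}: '{name}' has type {type(row[name]).__name__}")
            elif not spec["min"] <= row[name] <= spec["max"]:
                anomalies.append(f"row {i}: '{name}'={row[name]} is outside the training range")
    return anomalies


# Made-up rows, loosely in the spirit of a heart disease dataset.
training_rows = [
    {"age": 63, "chol": 233},
    {"age": 41, "chol": 204},
    {"age": 57, "chol": 354},
]
serving_rows = [
    {"age": 50, "chol": 250},     # looks fine
    {"age": 45},                  # missing feature
    {"age": "n/a", "chol": 300},  # wrong type
    {"age": 130, "chol": 210},    # value far outside what training saw
]

schema = infer_schema(training_rows)
anomalies = validate(serving_rows, schema)
for message in anomalies:
    print(message)
```

Catching problems like these before the data reaches the model is the kind of check that matters once a model moves from a notebook to production.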

TFX

Only a few years ago, not many people would have thought of training and running Machine Learning models in the browser. But that has become a reality now, thanks to TensorFlow.js. Sandeep Gupta gave another talk on this, where he shared how TensorFlow Playground paved the way for TensorFlow.js. Playground is an interactive neural network visualization tool built to run in the browser. The team that developed it had to do a lot of work, as porting a Machine Learning tool to the browser involved some hurdles and constraints. While doing so, they realized the potential of bringing Machine Learning to browsers. Running ML models in the browser comes with a lot of advantages: no drivers need to be installed, it is interactive, it is well connected to sensors, and the data stays with the user. All of this led to TensorFlow.js, a JavaScript library for building and deploying ML models in browsers. For some cool ML projects which you can run on your browser, see the links at the end of the blog.

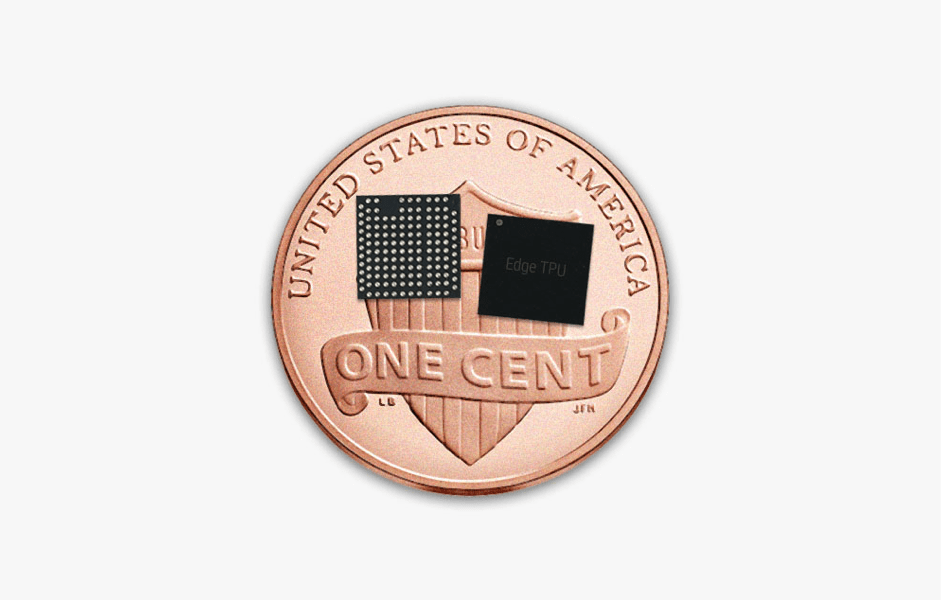

It’s not enough for models to run efficiently on high-end devices. With mobiles being the most used devices, the quest to get ML models to run as efficiently as possible on low-end devices is also seeing some results. Andrew Selle, a Staff Software Engineer on the TensorFlow team, took us through TensorFlow Lite, which enables mobile and embedded devices to run ML models. Running ML models directly on the device has many advantages. Since there is no need to contact a server for inference, on-mobile ML has lower latency and doesn’t depend on a data connection. The data, in this case, stays entirely on the device, which avoids privacy concerns. But it is a challenging task, as mobiles and embedded devices come with tight memory, low energy and little computational power. TFLite has made this possible and is already being used in production by many applications. Edge TPU is a TPU variant developed to power ML on edge devices.

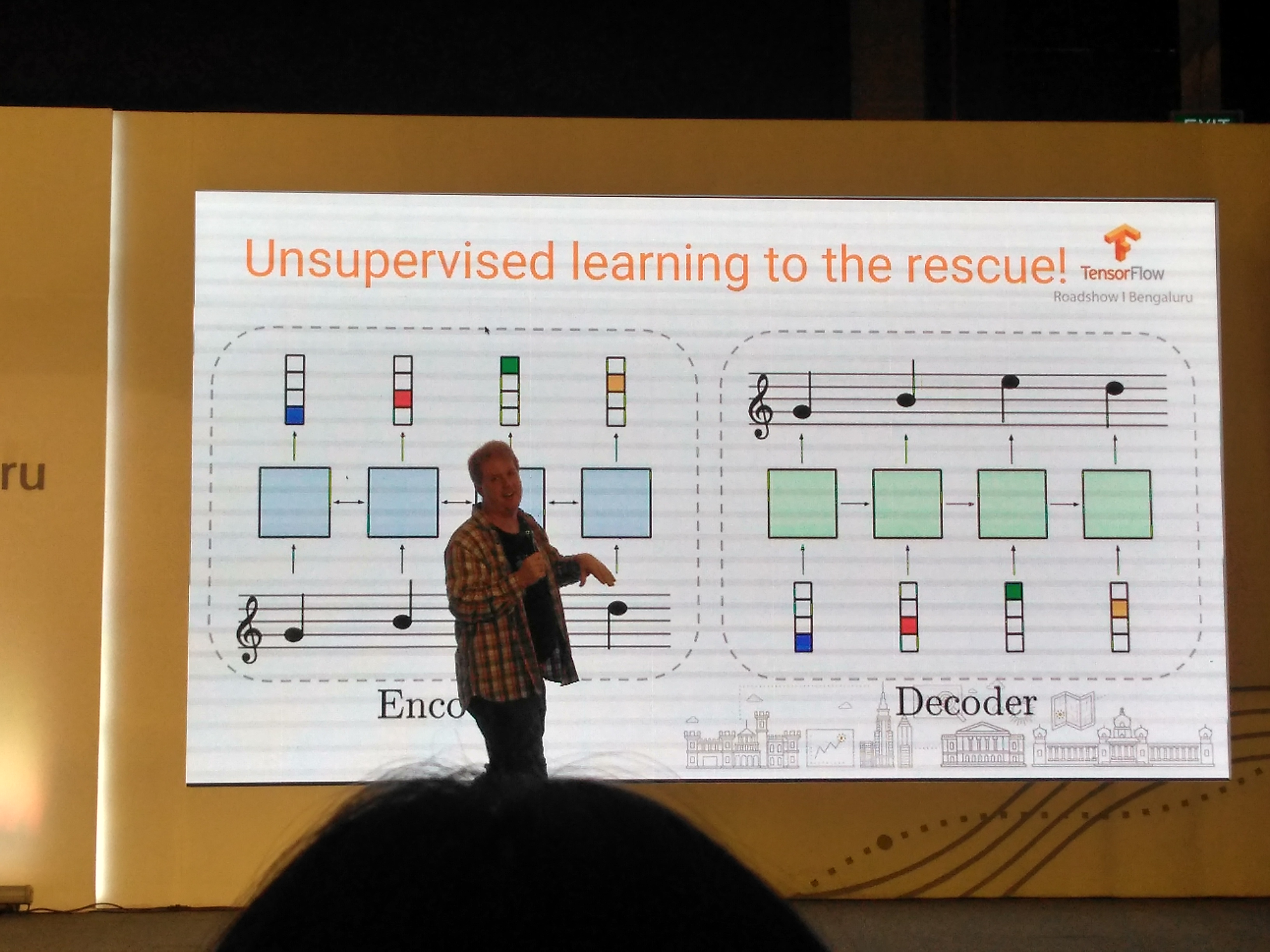

TensorFlow for Art and Music

With ML finding its place in almost every field, artists too have taken the plunge. There is growing interest in using ML to create art, and it is already being used to produce paintings, writing, music and more. Magenta is a TensorFlow project for generating art and music using Machine Learning. Wolff Dobson took the stage once again for the last talk of what turned out to be an amazing day. He showed us demos of some of the applications built using TensorFlow that play music and games.

It is an exciting time to be part of technology. Conferences like this have a lot to offer, and it is amazing how much one can learn by attending community events. Besides the very useful talks, it was really nice meeting the TensorFlow team and other ML researchers. Thanks to the entire Google India team, which has been organising many wonderful events like this for the community!

Google provides many free resources for anyone interested in ML, from beginner-level crash courses to research-level blogs. I wrote a blog post on many such useful resources, which you can find here: Google Knows How to Teach.

