Google Smart Compose, Machine Bias, Racist AI - Summarising One Night of Binge Reading from Blogs

After Sundar Pichai took the stage and began his Google I/O 2018 keynote, I started taking note of the interesting things that were being announced and demoed. There were some very interesting demos and announcements, especially for those who are into Deep Learning. I was curious about Gmail’s Smart Compose and Google Duplex among other things, both being use cases of Natural Language Processing. Google Duplex got a lot of attention, which was not surprising since the conversation Google Assistant had with a real human sounded seamless and human-like. If you are into Deep Learning, you would surely want to know at least a bit about how these are done, and Google’s research blog is a good place to learn more. And just in case you didn’t know, ai.googleblog.com is Google’s new research blog!

We are all familiar with word completion suggestions on phones. But why limit this feature to word completion? Smart Compose is the new Gmail feature that helps complete sentences by offering suggestions as we compose our emails. This too, like many recent advancements and features, leverages neural networks. In a typical word completion task, the suggested word depends on the prefix sequence of tokens/words. Given the previous words, the word most likely to follow is suggested. In the case of Smart Compose, in addition to the prefix sequence, the email header and the previous email body (if present, as when the current mail is a reply to an earlier one) are also used as inputs to provide suggestions for sentence completion.
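
To make the idea of "the next word depends on the prefix" concrete, here is a minimal sketch of a word suggester built from simple bigram counts. This is not how Smart Compose works (that uses neural networks and extra context such as the email header); the corpus and the function names `build_bigram_counts` and `suggest_next_word` are made up purely for illustration.

```python
from collections import Counter, defaultdict


def build_bigram_counts(sentences):
    """Count how often each word follows another in the given sentences."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def suggest_next_word(counts, prefix):
    """Suggest the most frequent follower of the last word in the prefix."""
    tokens = prefix.lower().split()
    if not tokens:
        return None
    followers = counts.get(tokens[-1])
    if not followers:
        return None  # the last word was never seen with a follower
    return followers.most_common(1)[0][0]


# A tiny, invented corpus standing in for previously written emails.
toy_corpus = [
    "thanks for the update",
    "thanks for the help",
    "thanks for the update on the project",
    "see you on monday",
]
counts = build_bigram_counts(toy_corpus)
print(suggest_next_word(counts, "Thanks for the"))
```

A real system would look at far more than the last word, which is exactly where the extra inputs mentioned above (subject, previous email) come in.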

Latency, scalability and privacy are the three challenges that have to be addressed to make this feature efficient, accessible and safe. For sentence completion suggestions to be effective, the latency has to be very low so that the user doesn’t notice any delay. Considering that more than a billion people use Gmail, it is hard to even imagine how different the context of each email would be. The model should be complex enough to handle that variety and scalable enough to serve that many users. Shouts of privacy are heard everywhere when any kind of data is involved, and there are only a few things that demand privacy more than our emails. The models, therefore, should not expose users’ data in any way.

Google’s blog on Smart Compose had references to a few research papers that were helpful in building the neural network architecture behind this new Gmail feature. The blog also shared the paper “Semantics derived automatically from language corpora necessarily contain human biases”. It’s a very interesting paper and it led me to a series of blogs. Even if you are neither an AI researcher nor an expert in Machine Learning, you must have heard about how many aspects of our lives are being changed by the advancements in those fields. Privacy and bias are two big concerns surrounding the use of applications or programs that learn from data. While privacy has been a concern for quite some time already, bias seems to be catching up. Any program that leverages Machine Learning to solve a problem needs data as input. Depending on the problem or task at hand, this input data could be text, image, audio etc. Irrespective of its type or form, it is all generated through some human action. And that means the prejudices and biases that exist in human actions and thoughts are very much part of the most important block of a learning model - its input.
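
One rough way to see how bias can show up in learnt word representations is to compare how close a word’s vector sits to two sets of attribute words. The sketch below does this with cosine similarity on tiny, hand-made 2D vectors; the vectors, the word choices and the `association` helper are all invented for illustration, and this is a simplification rather than the exact test used in the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(word_vec, attrs_a, attrs_b):
    """Mean similarity to attribute set A minus mean similarity to set B."""
    mean_a = sum(cosine(word_vec, a) for a in attrs_a) / len(attrs_a)
    mean_b = sum(cosine(word_vec, b) for b in attrs_b) / len(attrs_b)
    return mean_a - mean_b


# Made-up 2D "embeddings"; real ones are learnt from text and have
# hundreds of dimensions.
pleasant = [[1.0, 0.0], [0.9, 0.2]]
unpleasant = [[0.0, 1.0], [0.2, 0.9]]
flower = [0.9, 0.1]
insect = [0.1, 0.9]

print(association(flower, pleasant, unpleasant))  # positive: leans "pleasant"
print(association(insect, pleasant, unpleasant))  # negative: leans "unpleasant"
```

If the same kind of score comes out lopsided for names or occupations in embeddings trained on real text, the model has picked up that association from the data rather than from anything written in its code.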

When Latanya Sweeney from Harvard University searched on Google for her own name, to her surprise the results included an ad that read, “Latanya Sweeney arrested?” This led her to research Google search results, from which she concluded that names more commonly associated with Black people are more likely to bring up such search results or advertisements. After running searches for more than 2000 real names, she found that names associated with Black people were up to 25% more likely to show such arrest-related ads. Was Google search being racist here? This was the response that came from Google - “AdWords does not conduct any racial profiling. We also have an “anti” and violence policy which states that we will not allow ads that advocate against an organisation, person or group of people. It is up to individual advertisers to decide which keywords they want to choose to trigger their ads.”

In 2016, an internet user posted on Twitter about one of his experiences with Google image search. When he searched for “three white teenagers”, the results were images of smiling, happy white teenagers. When he tried a similar search with “three black teenagers”, the results were quite different. Though these results also had some normal, generic images, many of the others were images of jailed teenagers. The post went viral soon after it was posted, and that shouldn’t be surprising. What was the reason behind this? Was the algorithm behind the image search programmed to do this? Surely not. Google has explained this situation too - “Our image search results are a reflection of content from across the web, including the frequency with which types of images appear and the way they’re described online”.

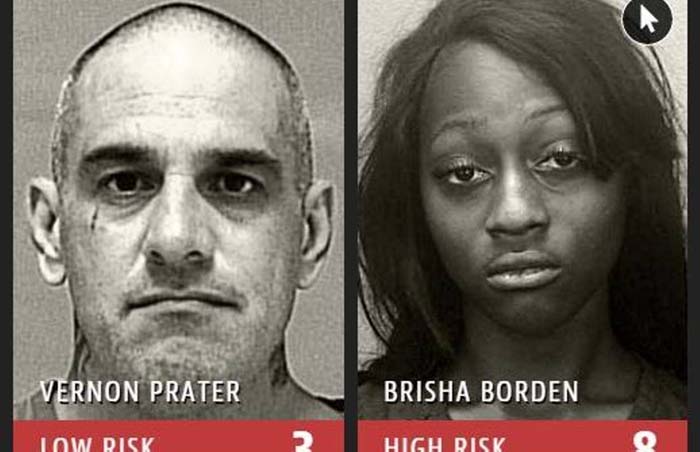

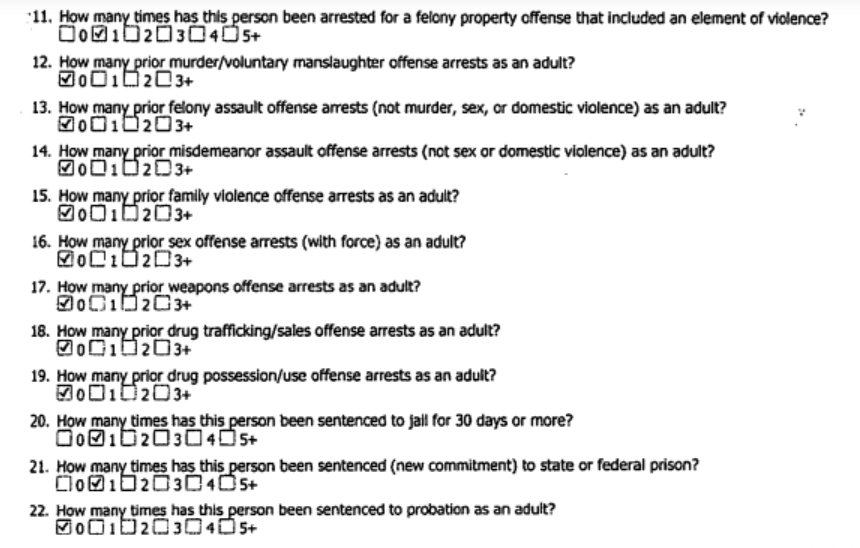

Is the problem limited to the applications and algorithms of the big tech companies that have access to huge amounts of data? Many courts across the United States use software that gives the accused/defendant a risk score. This score represents the likelihood of the person committing a crime in the future. Where does the software get the data to perform its analysis and give out a risk score? According to ProPublica’s blog, Northpointe, the company that developed one such risk assessment software, uses the answers to 137 questions as the data. These answers are either obtained directly by questioning the accused or taken from past criminal records.

The amount of data in this case may not be as large, but that doesn’t mean the program is free from bias. When Brisha Borden took a ride on a bicycle that was not hers and got arrested, the risk assessment program gave her a high score, meaning she was considered likely to commit more crimes in future. The same program gave a lower score to Vernon Prater, who had served 5 years in prison for armed robbery in the past. Yet two years later, Brisha, who was given a high score, hadn’t committed any new crimes, whereas Vernon, who was given a low score, was back in prison for a new crime. Data, again, is the key component of this risk assessment program.
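
One simple way to check whether a risk score treats groups differently is to look at people who did not reoffend and ask how many of them were still flagged as high risk, separately for each group. The sketch below does this on a handful of invented records; the data, the group names and the `false_positive_rate` helper are hypothetical and only meant to show the arithmetic.

```python
def false_positive_rate(records):
    """Share of non-reoffenders who were nevertheless flagged as high risk.

    Each record is a (flagged_high_risk, reoffended) pair of booleans.
    """
    flags_for_non_reoffenders = [
        flagged for flagged, reoffended in records if not reoffended
    ]
    if not flags_for_non_reoffenders:
        return None  # no non-reoffenders in this group to measure
    return sum(flags_for_non_reoffenders) / len(flags_for_non_reoffenders)


# Invented records for two hypothetical groups.
group_a = [(True, False), (True, False), (False, False), (True, True)]
group_b = [(False, False), (False, False), (True, False), (False, True)]

print(false_positive_rate(group_a))
print(false_positive_rate(group_b))
```

In this toy data, two out of three non-reoffenders in the first group were flagged as high risk, against one out of three in the second. A gap like that on real data is a sign that the score’s mistakes are not evenly spread.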

These are not just a few handpicked incidents chosen to explain the problem of bias. Years ago there was an incident in which HP’s face tracking webcam worked fine with white people but failed to recognize dark-skinned people. Google Photos classified Black people as gorillas. The root cause of all these is that bias is very much part of the data being used. This can happen either when there is bias in the data generated or when the data collected to train the models is not representative. Not everyone will laugh it off when an application on their phone or a website classifies them or a friend of theirs as some animal. No company working on algorithms and applications that run on data wants them to act biased. Many of us are well aware that the algorithms being used for these purposes are far from perfect. Yet people react to these biases as spontaneously as if a fellow person had subjected them to it.

Latanya Sweeney’s incident happened in 2013. The other incidents mentioned happened between 2014 and 2016, and HP’s face tracking issue happened way back in 2009. Given that these incidents happened years ago, surely the problems with bias have been fixed by now? No. Google fixed the Photos app problem by blocking images in the app from being categorized as gorillas, chimpanzees and so on. Sounds more like a workaround than a fix? The problem we have here is that these are biases, not bugs. They don’t exist in the code. They exist in the data, and that’s what makes this a really hard problem to fix.

The learning models are not perfect, and the companies developing and building them are not pretending they are either. Google doesn’t hesitate to acknowledge that even its state of the art algorithms are vulnerable to bias in data, and it continuously works on making these algorithms better. Apple has learnt from the flaws and problems faced by earlier face recognition software. It made sure its face recognition program, built using neural networks, was trained on properly representative data - data that covers diversity in age, gender, race, ethnicity etc. The leading tech companies and many others are making strides towards improving these algorithms, which surely have a lot of potential for good: early detection and diagnosis of diseases, aiding the differently abled, education, making computers and technology better at performing human-like tasks etc.

Though these advancements have happened rapidly in the past few years, problems like algorithmic bias still exist. Since learning models are helpless when bias exists in the data on which they are trained, developing models that not only learn associative patterns but also learn to handle bias implicitly when it exists would be a big step. We as humans are so thought driven. Yet even with biased thoughts, we have the power to act unbiased if we choose to. Can learning models learn to do this? If not exactly, at least to some extent?

Thanks for reading 😄 😄
